The absolute number one request I get from clients rolling out GenAI assistance to their developers is:

“I want to know how much code is generated by GenAI, by team, and sometimes even by person.”

I think it’s a very bad idea, and here is why.

It takes unnecessary effort

You want to know how much GenAI code is introduced in your codebase? No problem, it’s technically easy. If you want deterministic watermarking, rather than relying on the involuntary patterns your LLM leaves behind, you will find a significant number of academic papers on adding invisible signatures to generated code, which a tool can then detect and report on. Commercial platforms already offer to do this on your behalf, for a fee.

You will obviously need to set up automations in your CI pipelines, as well as dashboards, reports, ingestion into a data platform (?) and… you will still fail. Why?

Think about this: a developer starts writing code and they accept an autocompletion suggestion from GitHub Copilot. Do you:

- consider that line of code AI-generated?

- discount what the developer did? The suggestion might have been so marginal that it barely makes a dent in the code generated.

What if a developer amends GenAI-generated code? Does it count against your metric or not?
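To make the ambiguity concrete, here is a minimal sketch of how the same change produces very different numbers depending on the counting rule you pick. The `Line` record and the `ai_share` function are hypothetical names made up for this illustration; they are not part of any real tool.

```python
from dataclasses import dataclass


@dataclass
class Line:
    text: str
    suggested_by_ai: bool   # the line started as an accepted suggestion
    edited_by_human: bool   # a developer amended it afterwards


def ai_share(lines, count_edited):
    """Fraction of lines attributed to GenAI under a given counting rule.

    count_edited=True  -> amended suggestions still count as AI-generated
    count_edited=False -> only untouched suggestions count
    """
    if not lines:
        return 0.0
    counted = sum(
        1
        for line in lines
        if line.suggested_by_ai and (count_edited or not line.edited_by_human)
    )
    return counted / len(lines)


# One small change: five lines, three started as suggestions, two were amended.
change = [
    Line("def total(items):", suggested_by_ai=False, edited_by_human=False),
    Line("    result = 0", suggested_by_ai=True, edited_by_human=False),
    Line("    for item in items:", suggested_by_ai=True, edited_by_human=True),
    Line("        result += item.price", suggested_by_ai=True, edited_by_human=True),
    Line("    return result", suggested_by_ai=False, edited_by_human=False),
]

strict = ai_share(change, count_edited=False)   # 1 of 5 lines
lenient = ai_share(change, count_edited=True)   # 3 of 5 lines
print(f"strict: {strict:.0%}, lenient: {lenient:.0%}")
```

Neither figure is wrong, and neither tells you anything useful about the team.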

As you can see, it’s a rabbit hole that brings you no benefit.

It dents developers’ confidence

Let’s say you gave up on the idea of running these tools and you just want a surface-level idea of how much code is generated. Lightweight, little effort for reporting. You end up adding a custom instruction to your repos along the lines of this:

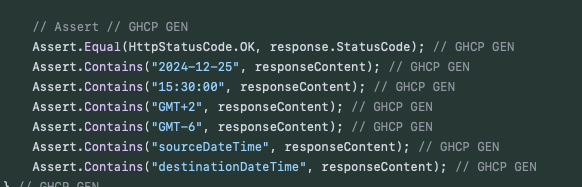

Congratulations! You will have a bunch of lines watermarked:

Now, assume your developers need to work on these lines of code. Do they need to manually remove the GHCP GEN comment every time?

If so, it will cause a loss of productivity at a minimum. And that is before you consider how developers will be incentivised to cheat the system, either by adding extra code themselves with the comment or by actively hiding their use of GenAI acceleration.

Doing this causes a significant loss of trust for developers, and it will have negative effects on developer productivity across the board. Is it really worth it?
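A minimal sketch of why a comment-based count is so easy to distort, assuming the marker is a trailing `# GHCP GEN` comment. The exact comment format and the `marked_share` helper are assumptions made for this illustration.

```python
MARKER = "GHCP GEN"  # marker text from the custom instruction; comment format is assumed


def marked_share(source: str) -> float:
    """Fraction of non-blank lines that carry the marker comment."""
    lines = [line for line in source.splitlines() if line.strip()]
    if not lines:
        return 0.0
    return sum(MARKER in line for line in lines) / len(lines)


honest = (
    "def area(w, h):\n"
    "    return w * h  # GHCP GEN\n"
)

# A developer pads the file with hand-written lines carrying the marker.
inflated = honest + "x = 1  # GHCP GEN\ny = 2  # GHCP GEN\n"

# A developer strips the marker to hide that a suggestion was used.
hidden = honest.replace("  # GHCP GEN", "")

for name, src in [("honest", honest), ("inflated", inflated), ("hidden", hidden)]:
    print(f"{name}: {marked_share(src):.0%}")
```

The code behaves identically in all three cases, yet the reported share goes from half, to three quarters, to zero.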

Focus on the best practices

There is no substitute for DORA metrics, it’s that simple. You can derive your own, based on DORA’s and adapted to the state of the organisation you work in, and add forms of active measurement around the SPACE framework. However, you will always end up gravitating back to DORA, because it is the least disruptive and most accurate measurement you can use for your teams.
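As a rough illustration of how little you need to get started, here is a sketch that computes the four DORA key metrics (deployment frequency, lead time for changes, change failure rate, time to restore) from a list of deployment records. The `Deployment` record, its field names, and the sample data are all assumptions invented for this example; in practice the records would come from your CI/CD and incident tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                  # first commit of the change
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_summary(deployments, period_days):
    """Compute the four DORA key metrics over a reporting period."""
    if not deployments:
        raise ValueError("no deployments in the period")
    failures = [d for d in deployments if d.failed]
    restore_times = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployment_frequency_per_day": len(deployments) / period_days,
        "median_lead_time": median(d.deployed_at - d.committed_at for d in deployments),
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


# Made-up sample data: four deployments in one week, one of which failed.
week = [
    Deployment(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 15)),
    Deployment(datetime(2024, 1, 2, 10), datetime(2024, 1, 3, 10),
               failed=True, restored_at=datetime(2024, 1, 3, 12)),
    Deployment(datetime(2024, 1, 4, 9), datetime(2024, 1, 4, 11)),
    Deployment(datetime(2024, 1, 5, 9), datetime(2024, 1, 5, 17)),
]

summary = dora_summary(week, period_days=7)
for name, value in summary.items():
    print(f"{name}: {value}")
```

Note that nothing here asks who or what wrote the code: the metrics describe outcomes for the team, whether or not GenAI was involved.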